Are You Ready for the Coming Age of Mass Genius?

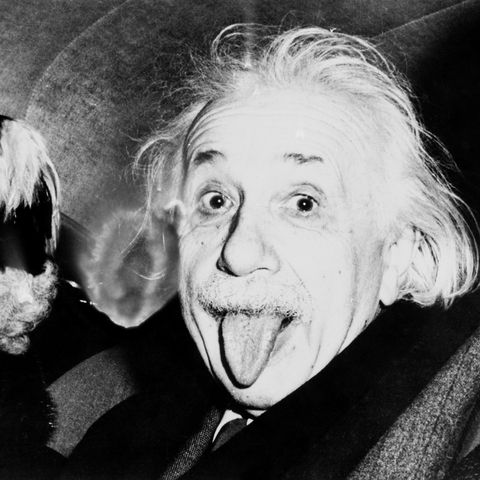

Some tech experts believe the intelligence of the human race is about to skyrocket. Some of you, we know, are thinking: “And not a moment too soon!”

What would account for this ballistic bulge in bubba’s brainpower?

Peter Diamandis thinks he knows. Diamandis holds degrees in molecular genetics and aerospace engineering from MIT, and made his reputation as the best-selling author of Abundance: The Future Is Better Than You Think. He says the growth of internet connectivity, the cloud, and maturing brain-computer interfaces will bring a dramatic acceleration of mass genius, in both individual and collective intelligence. Not only will the world at large become smarter; each of us will become a genius.

Mass Genius through Connectivity

The first factor Diamandis cites is connectivity. For most of history, he says, the greatest intellects have been squandered. Many were hindered by barriers of sex, race, ethnicity, class, and culture. Most, though, simply lacked the means to communicate their insights to the world.

The coffee houses founded in eighteenth-century Britain and continental Europe played a critical role in breaking down these barriers. There, people from all classes and vocations met to discuss and debate ideas, and to refine their own thinking based on the feedback they got from others. The intellectual ferment of coffee house culture fostered the Enlightenment and the Industrial Revolution.

The concentration of population in large urban centers extended the idea-generating power of the coffee house to many more people.

Diamandis says the internet is our current version of the eighteenth-century coffee house and the urban center, but many times more powerful than either. Our networks need not be confined to our neighborhoods or our cities; they can now encompass the entire globe. More than four billion people now have internet connections. Soon all of us will.

The Cloud and Brain-Computer Interfaces

The second factor, Diamandis says, is the cloud, which will be enhanced by brain-computer interfaces. He says we will soon be able to upload our thoughts to the cloud and download information directly to our brains, bypassing the usual cumbersome learning process. Research will become more efficient by several orders of magnitude, because it will be rooted in what Diamandis calls “the neurological basis for innovation.”

Is Diamandis right about this? We should certainly hope so. We wouldn’t be burdened with so many selfies or cat videos on social media. We might even hear Joy Behar or Barbra Streisand say something sensible.

To tap your own genius, you need a reliable internet connection. For the one that works best for you, call Satellite Country. We can help.